Inspired by Pavlov, Researchers Use Light to Bring Classical Conditioning to AI

A team at the University of Oxford drew inspiration from Pavlov's dog to design a photonics-based neural network.

Inspired by Ivan Pavlov's classical conditioning experiments of the early 20th century, researchers at the University of Oxford recently created an on-chip optical processor that could open the door to new advances in artificial intelligence (AI) and machine learning (ML).

Supervised learning of the on-chip hardware. Image used courtesy of Optica and Tan et al

Oxford claims its new system offers advanced detection of similarities within datasets. Unlike conventional machine learning algorithms, which run traditional neural networks on electronic processors, Oxford's system runs on a backpropagation-free photonic network and relies on Pavlovian associative learning.

Finding Inspiration in Pavlov's Dog

Classical conditioning is the process of associating two sensory stimuli so that either one triggers the same response. The process involves sensory and motor neurons: sensory neurons receive the stimuli, and motor neurons generate the resulting actions.

Ivan Pavlov, who discovered this concept in the early 1900s, found that he could make a dog salivate at the sound of a bell by teaching it to associate the bell with food. In this associative learning process, a stimulus s2 (the bell) becomes associated with a natural stimulus s1 (the sight or smell of food) so that both trigger the same response (salivation).

Pavlovian associative learning. Image used courtesy of Optica and Tan et al

Researchers at the University of Oxford applied this concept to a simplified neural circuit with two key roles: 1) converge and associate two inputs, and 2) store the memory of these associations for later reference. Central to this research is the associative monadic learning element (AMLE), a device designed to efficiently carry out the basic associative learning process of classical conditioning.

About the Associative Monadic Learning Element

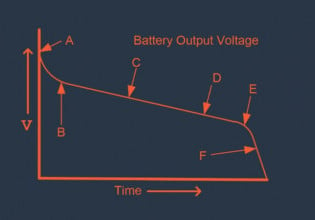

An AMLE achieves associative learning by integrating a thin film of phase-change material with two coupled waveguides. The material, Ge2Sb2Te5 (GST), modulates the coupling between the waveguides: GST can exist in either an amorphous or a crystalline state, and its state determines how strongly the waveguides couple.
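To make the idea of state-dependent coupling concrete, the sketch below uses the textbook formula for a lossless, phase-matched directional coupler, in which the fraction of power crossing from one waveguide to the other is sin²(κL). The coupling coefficients, the 20 µm coupler length, and the linear interpolation between GST states are all hypothetical choices for illustration, not parameters of the Oxford device.

```python
import math

# Hypothetical coupling coefficients in rad/um; illustrative assumptions only.
KAPPA_CRYSTALLINE = 0.0           # assume crystalline GST suppresses coupling
KAPPA_AMORPHOUS = math.pi / 40.0  # chosen so a 20 um coupler fully transfers power


def coupling_coefficient(amorphous_fraction: float) -> float:
    """Interpolate kappa linearly between the two GST states (a simplifying assumption)."""
    if not 0.0 <= amorphous_fraction <= 1.0:
        raise ValueError("amorphous_fraction must be between 0 and 1")
    return KAPPA_CRYSTALLINE + amorphous_fraction * (KAPPA_AMORPHOUS - KAPPA_CRYSTALLINE)


def cross_coupled_fraction(amorphous_fraction: float, length_um: float = 20.0) -> float:
    """Fraction of input power that crosses into the second waveguide.

    Textbook result for a lossless, phase-matched directional coupler:
    P_cross / P_in = sin^2(kappa * L).
    """
    kappa = coupling_coefficient(amorphous_fraction)
    return math.sin(kappa * length_um) ** 2


for frac in (0.0, 0.5, 1.0):
    print(f"amorphous fraction {frac:.1f}: {cross_coupled_fraction(frac):.2f} of the power crosses over")
```

Under these assumptions, a fully crystalline film transfers no power (0.00), a half-amorphized film transfers half (0.50), and a fully amorphous film transfers all of it (1.00). The real device's coupling depends on its geometry and on GST's optical properties, which this sketch does not model.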

While the GST is crystalline, stimuli s1 and s2 show no association. When the stimuli (or inputs) arrive simultaneously, however, the GST begins to amorphize and the stimuli begin to associate. The more the GST amorphizes, the more strongly s1 and s2 associate, until their outputs become nearly indistinguishable; this point is known as the learning threshold. Through this photonic associative learning, the AMLE provides a machine learning framework that can address general learning tasks.
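The learning behavior described above can be captured in a toy model. In the sketch below, `amorphous_fraction` stands in for the GST state and also serves as the stored memory. Only simultaneous s1 and s2 inputs increase it, and learning is declared once s2 alone produces at least 90% of s1's output. The class name, step size, and threshold are hypothetical; this models the logic described in the article, not the device physics.

```python
from dataclasses import dataclass


@dataclass
class ToyAMLE:
    """Toy model of one association element (not the device physics).

    amorphous_fraction stands in for the GST state: 0.0 = fully crystalline,
    1.0 = fully amorphous. step and threshold are hypothetical parameters.
    """
    amorphous_fraction: float = 0.0
    step: float = 0.25       # amorphization caused by one coincident s1/s2 pair
    threshold: float = 0.9   # s2 must reach 90% of s1's output to count as learned

    def present(self, s1: bool, s2: bool) -> None:
        """Apply one round of stimuli; only simultaneous s1 and s2 change the state."""
        if s1 and s2:
            self.amorphous_fraction = min(1.0, self.amorphous_fraction + self.step)

    def response(self, s1: bool, s2: bool) -> float:
        """s1 always drives the full response; s2 alone drives a response
        proportional to the stored association."""
        if s1:
            return 1.0
        return self.amorphous_fraction if s2 else 0.0

    def has_learned(self) -> bool:
        """True once s2 alone yields an output nearly indistinguishable from s1's."""
        return self.response(False, True) >= self.threshold * self.response(True, False)


amle = ToyAMLE()
amle.present(True, False)   # food alone: no association forms
amle.present(False, True)   # bell alone: no association forms
pairs = 0
while not amle.has_learned():
    amle.present(True, True)  # food and bell together
    pairs += 1
print(pairs, amle.response(False, True))  # prints: 4 1.0
```

Presenting either stimulus on its own leaves the state unchanged. With the assumed step size, four coincident pairings push the element past the learning threshold.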

Electric field profiles of AMLE before and after learning. Image used courtesy of Optica and Tan et al

AMLEs Eliminate Backpropagation, Boost Computational Speed

Conventional AI systems built on neural networks require large datasets during the learning process, which increases processing and computational costs. These networks rely on backpropagation to achieve high accuracy.

According to the University of Oxford, the AMLE eliminates the need for backpropagation by using a memory material to learn patterns and associate similar features in datasets. Without backpropagation, training is faster. For instance, a conventional neural network might need up to 10,000 rabbit/non-rabbit images to learn to recognize a rabbit, while the AMLE can achieve similar results with just five rabbit/non-rabbit image pairs, at significantly lower processing and computational cost.
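To illustrate how a single layer of weights can learn from a handful of pairs without backpropagation, the sketch below uses a simple Hebbian-style rule, which is our own stand-in and not the Oxford team's published method. It makes one pass over the data and computes no gradients. Features active in a target image are strengthened, and features active in the paired non-target image are weakened. The 3x3 images are synthetic and hypothetical.

```python
from typing import List, Sequence, Tuple

Image = Sequence[int]  # flattened binary image: 1 = feature present


def train_associative(pairs: List[Tuple[Image, Image]]) -> List[float]:
    """Single pass, no gradients: one weight per input feature.

    Features active in a target image are strengthened; features active in the
    paired non-target image are weakened. Weights are then clipped at zero and
    scaled so the strongest association equals 1.0.
    """
    n = len(pairs[0][0])
    raw = [0.0] * n
    for target, non_target in pairs:
        for i in range(n):
            raw[i] += target[i] - non_target[i]
    clipped = [max(0.0, w) for w in raw]
    peak = max(clipped) or 1.0
    return [w / peak for w in clipped]


def classify(weights: List[float], image: Image, threshold: float = 0.5) -> bool:
    """Mean learned weight over the image's active features, compared to a threshold."""
    active = [w for w, x in zip(weights, image) if x]
    return bool(active) and sum(active) / len(active) >= threshold


# Five synthetic 3x3 (target, non-target) pairs. A "rabbit" has two ears (top
# corners) and a body (centre), plus, in most examples, one extra pixel.
PAIRS = [
    ([1, 0, 1, 0, 1, 0, 0, 0, 0], [0, 0, 0, 0, 1, 0, 1, 1, 1]),
    ([1, 0, 1, 0, 1, 0, 1, 0, 0], [0, 1, 0, 1, 1, 1, 0, 0, 0]),
    ([1, 0, 1, 0, 1, 0, 0, 0, 1], [0, 0, 0, 1, 0, 1, 0, 1, 0]),
    ([1, 1, 1, 0, 1, 0, 0, 0, 0], [0, 1, 0, 0, 1, 0, 0, 1, 0]),
    ([1, 0, 1, 0, 1, 1, 0, 0, 0], [0, 0, 0, 0, 0, 0, 1, 1, 1]),
]

weights = train_associative(PAIRS)
print(classify(weights, [1, 0, 1, 0, 1, 0, 0, 1, 0]))  # unseen rabbit-like image: True
print(classify(weights, [0, 0, 0, 0, 1, 0, 1, 0, 1]))  # unseen non-rabbit image: False
```

After five pairs, the weights concentrate on the features shared by the targets: the two "ears" reach 1.0 and the "body" reaches 0.4. That is enough to separate the two unseen test images.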

Images used to test whether the network can classify cat and non-cat images. Image used courtesy of Optica and Tan et al

The AMLE also uses wavelength-division multiplexing (WDM) to increase computational speed. WDM lets the AMLE carry several optical signals, each at a different wavelength, over a single channel, so multiple inputs can be processed in parallel. Because the AMLE sends and receives data as light, this parallelism yields higher information density and faster pattern recognition.
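The sketch below shows the core WDM principle with an electrical analogy: several channels share one medium, yet each can be recovered independently. Integer carrier frequencies stand in for optical wavelengths. Each channel's data rides on its own carrier, the carriers are summed into one shared signal, and each channel is recovered by projecting onto its carrier, as in a single DFT bin. The sample count, carrier indices, and amplitudes are arbitrary assumptions.

```python
import math
from typing import Dict, List

SAMPLES = 64  # samples per observation window (arbitrary choice for the sketch)


def multiplex(channels: Dict[int, float]) -> List[float]:
    """Combine every channel onto one shared signal.

    Each key is a carrier index (whole cycles per window) standing in for an
    optical wavelength; each value is the amplitude that channel carries.
    """
    for k in channels:
        if not 0 < k < SAMPLES // 2:
            raise ValueError("carrier index must lie strictly between 0 and SAMPLES/2")
    return [
        sum(a * math.cos(2 * math.pi * k * n / SAMPLES) for k, a in channels.items())
        for n in range(SAMPLES)
    ]


def demultiplex(signal: List[float], carrier: int) -> float:
    """Recover one channel's amplitude by projecting onto its carrier.

    Distinct carriers are orthogonal over a full window, so the other channels
    contribute nothing to the projection.
    """
    return 2.0 / SAMPLES * sum(
        x * math.cos(2 * math.pi * carrier * n / SAMPLES) for n, x in enumerate(signal)
    )


channels = {3: 0.8, 7: 0.2, 12: 0.5}
shared = multiplex(channels)
recovered = {k: round(demultiplex(shared, k), 6) for k in channels}
print(recovered)  # {3: 0.8, 7: 0.2, 12: 0.5}
```

Because the carriers are orthogonal, the three channels pass through one signal without interfering. In an optical system, this is what allows several data streams to be processed at once.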

While the new design cannot fully replace conventional neural networks, it can complement them, according to Professor Cheng, a co-author of the AMLE research. For high-volume learning tasks on simpler datasets, the AMLE device can significantly speed up optical processing.

Building AMLE on a Photonic Platform

The researchers at the University of Oxford implemented an AMLE on a photonic platform. Using this platform, the team demonstrated the viability and effectiveness of a backpropagation-free artificial neural network architecture with a single layer of weights.

The researchers observed that two different inputs can become correlated and generate a similar output if they arrive simultaneously, given a preset optical delay. This mechanism could allow a single element to associate multiple data streams, including streams carried at different wavelengths, as long as the optical signals do not interfere with one another.
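The timing condition described above can be sketched as a simple coincidence check. Here we assume that s2 passes through a fixed delay line before reaching the element, and that both stimuli are rectangular pulses of equal width. Two such pulses overlap only if their arrival times differ by less than the pulse width. The delay, pulse width, and launch times are hypothetical values.

```python
from typing import List, Tuple


def pulses_overlap(launch_s1_ps: float, launch_s2_ps: float,
                   delay_s2_ps: float, pulse_width_ps: float) -> bool:
    """True if rectangular pulses of equal width overlap at the element.

    s2 is assumed to pass through a fixed delay line of delay_s2_ps before
    reaching the element; s1 is assumed to arrive at its launch time.
    """
    arrival_s1 = launch_s1_ps
    arrival_s2 = launch_s2_ps + delay_s2_ps
    return abs(arrival_s1 - arrival_s2) < pulse_width_ps


def count_coincidences(launches: List[Tuple[float, float]],
                       delay_s2_ps: float, pulse_width_ps: float) -> int:
    """Count how many launched s1/s2 pairs overlap and could therefore drive association."""
    return sum(pulses_overlap(t1, t2, delay_s2_ps, pulse_width_ps) for t1, t2 in launches)


DELAY_PS = 50.0   # hypothetical preset optical delay on the s2 path
WIDTH_PS = 10.0   # hypothetical pulse width

launches = [(50.0, 0.0), (100.0, 48.0), (200.0, 100.0), (300.0, 265.0)]
print(count_coincidences(launches, DELAY_PS, WIDTH_PS))  # 2
```

Only the first two pairs arrive within one pulse width of each other after the delay. In the toy picture, only those pairs would contribute to the association.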

According to the University of Oxford, this research may set a precedent for next-generation machine learning algorithms and architectures.